Policy-driven, automated compliance enforces zero-trust security principles

Identity Access Management Tools for Banks and Credit Unions

Community banks and credit unions manage hundreds of systems. Whenever employees are hired or change roles, or permissions evolve, keeping those systems updated requires manual work.

Provision® IAM was designed specifically to meet the unique needs of banks and credit unions. It automates the tedious tasks of user access management, freeing your employees to focus on more important work while increasing security and greatly reducing the time required for audit prep.

(Identity access management can be a complicated concept. Need a refresher? What is IAM)

Provision® Is the IAM Built Specifically for Financial Institutions

Cloud-based SaaS means no software to install and manage on premises

Single source of record for internal and external audits

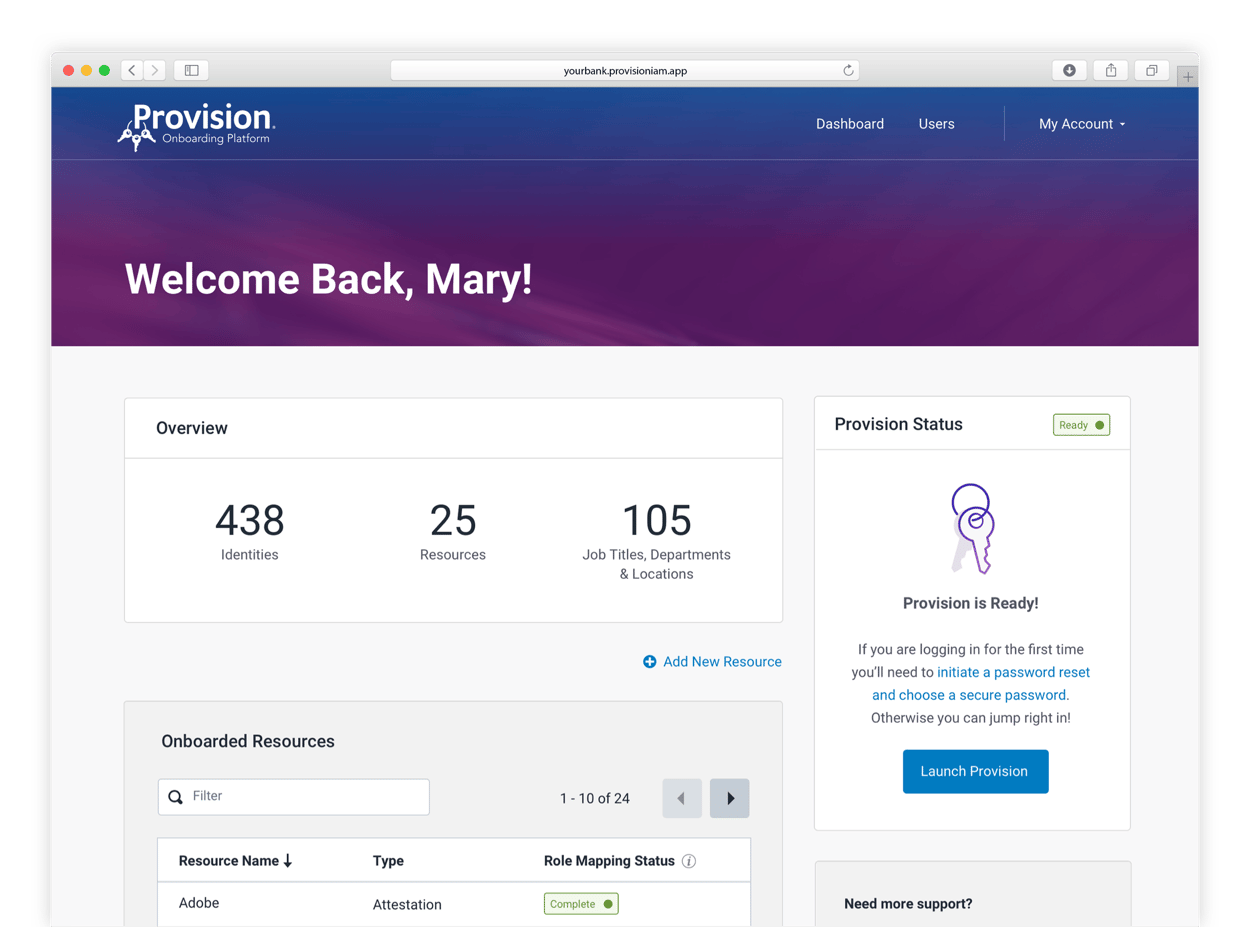

Step-by-step onboarding tool with bank-friendly terminology

Implement on your own schedule, add new systems on demand

Flexible reporting capabilities with the complete data set, no sampled data

Growing library of Banking and Business Management connectors

Connect to virtually any system, even legacy ones. Full APIs not required!

Connect with Major Banking Cores, Including:

Are You At Risk?

- Spreadsheets and legacy systems used to track permissions leave security holes for hackers
- 53% of security breaches occur within the institution
- The cost to remediate averages $8.19M per incident

Provision® IAM Mitigates Security Risks

- Permissions update immediately and automatically based on your policies
- Ensures employees have only the minimum privileges required
- Automatically eliminates system access for terminated employees
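
As a rough illustration only, and not a description of how Provision® IAM is actually built, policy-driven access can be thought of as comparing each employee's current permissions with what the policy allows for their role, then granting or revoking the difference. The role names, permission strings, and function names below are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission policy; every name here is illustrative.
POLICY = {
    "teller": {"core:view_accounts", "core:post_transactions"},
    "loan_officer": {"core:view_accounts", "los:edit_applications"},
}


@dataclass
class Employee:
    name: str
    role: str
    active: bool = True  # set to False when the employee is terminated


def desired_permissions(employee):
    """Return only what the policy grants for the role; nothing if terminated."""
    if not employee.active:
        return set()
    return set(POLICY.get(employee.role, set()))


def reconcile(employee, current):
    """Compute the grants and revocations needed to match the policy."""
    target = desired_permissions(employee)
    return {"grant": target - current, "revoke": current - target}


# A teller who still holds a loan-system permission from a previous role.
alice = Employee("Alice", "teller")
changes = reconcile(alice, {"core:view_accounts", "los:edit_applications"})
print(changes)
```

In this sketch, the leftover loan-system permission lands in the `revoke` set and the missing teller permission lands in `grant`. Marking the employee inactive makes the target set empty, so every remaining permission is revoked.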

Be Proactive with Provision® IAM

- Our self-guided onboarding platform means you can get up and running faster
- No other IAM platform affordably integrates your systems into an automated, centralized solution
- Manages users and their unique permissions through built-in workflows
- Actively documents changes in a well-structured audit log
- Ensures compliance regulations are being met across all systems

Case Studies

The right ideas, technology, execution, and people can create something special. Here are just a few examples of how our strategic thinking has helped clients and brands around the world to accomplish their goals:
